Verified partisan accounts follow sketchy user with a history of boosting foreign disinformation

The tweet receiving the most attention in a hashtag network had been boosted by a user with a history of promoting foreign disinformation. Why are prominent figures following that account?

A hashtag analysis led Hoaxlines to take a closer look at users within the network. A key account had a history of boosting Kremlin-associated talking points about Syria in 2013 and claims about emails in 2016. The account was likely operated by a human at least part of the time, but it has also displayed bot-like behavior. It’s unclear why so many verified GOP accounts, along with a congressman and a wealthy tech mogul, follow this seemingly insignificant account.

The FJBiden hashtag network

Tonight's analysis raised more questions than it answered. Hoaxlines watched tweets under the query “FJB” OR “FJBiden” OR “LetsGoBrandon” because we have observed inauthentic activity under similar hashtags.

The activity centered around one tweet to an extreme degree. The most-engaged tweet drew over 9600 incoming engagements (retweets, replies, or quotes), while the second-place tweet drew fewer than 1500.
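The counting behind this comparison can be sketched in a few lines. This is only an illustration under stated assumptions, not Hoaxlines' actual pipeline: the record layout (a `target_id` field naming the tweet that was retweeted, replied to, or quoted) and the function name are hypothetical.

```python
from collections import Counter


def rank_by_incoming_engagement(interactions):
    """Count how many retweets, replies, and quotes point at each tweet.

    `interactions` is a hypothetical list of dicts, each with a
    `target_id` key naming the tweet that received the engagement.
    Returns (tweet_id, count) pairs, most-engaged first.
    """
    counts = Counter(row["target_id"] for row in interactions)
    return counts.most_common()


# Toy data (not the real dataset): tweet "A" dominates tweet "B".
sample = (
    [{"type": "retweet", "target_id": "A"}] * 6
    + [{"type": "reply", "target_id": "A"}] * 2
    + [{"type": "quote", "target_id": "B"}] * 2
)
ranking = rank_by_incoming_engagement(sample)
top, runner_up = ranking[0], ranking[1]
ratio = top[1] / runner_up[1]  # how lopsided the attention is
```

A large ratio between the first and second entries is what "centered around one tweet" means in practice.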

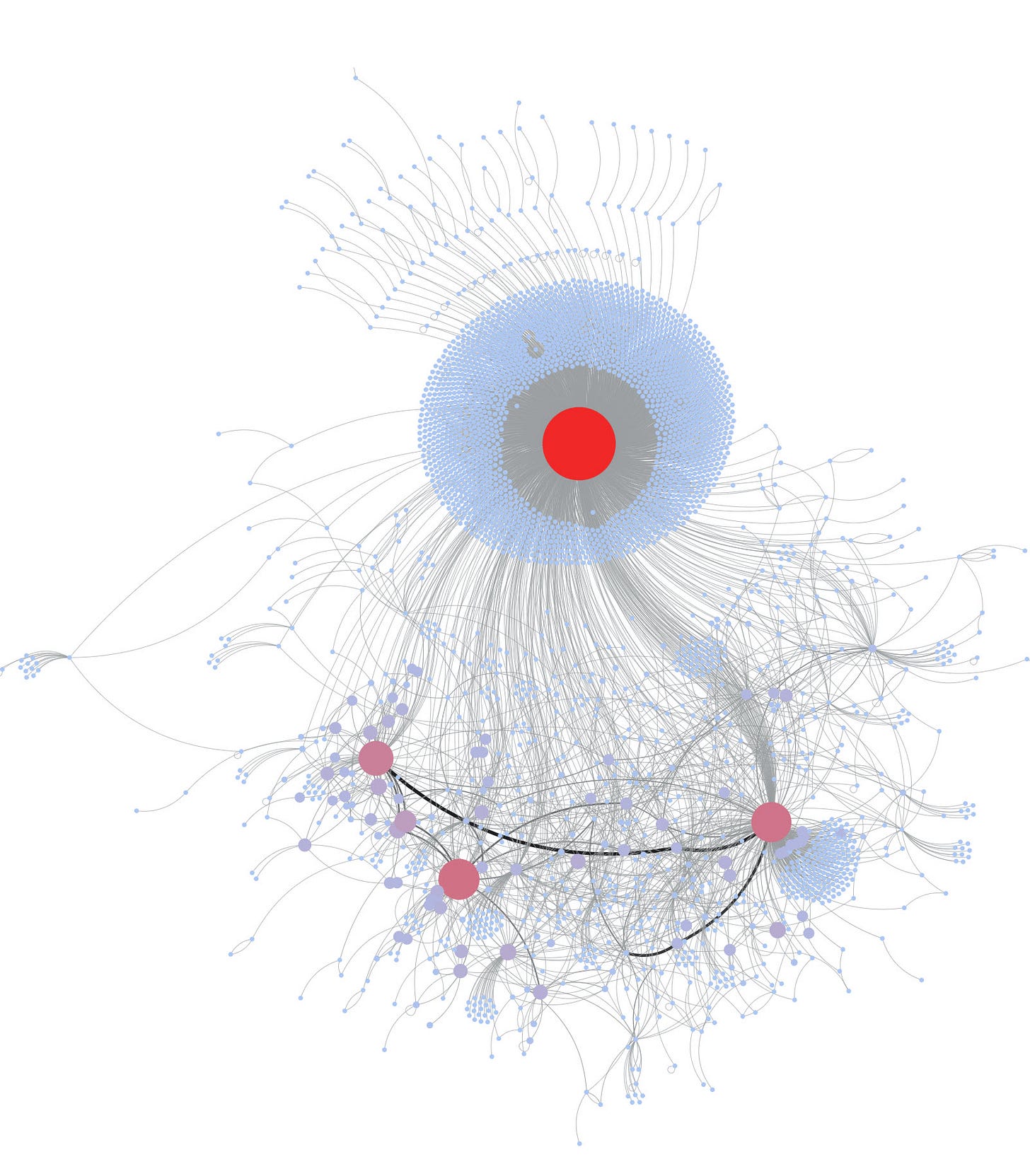

Hoaxlines selected the tweet with over 9600 incoming engagements for closer analysis and then visualized the data in a tweet reply network (See Figure 1).

Just outside the tweet was a network of accounts generating engagement. Hoaxlines ran Botometer scoring on only the top 100 most active accounts in this tweet reply network.1 Still, we sized and colored the nodes in the first graph by Botometer score, showing just how many in the top 100 exhibited bot-like behavior. Nodes with light blue coloring had a low or no score; nodes that leaned more toward bright red had a high score. A high score means the account behaves less like a genuine user and more like one trying to manipulate the platform.

Among the top 100 most active accounts, only 15 received a Botometer score indicating the account was more likely to be genuine than inauthentic. Inauthentic does not necessarily mean automated: people operating accounts can and do manipulate platforms, in violation of Twitter’s policies.

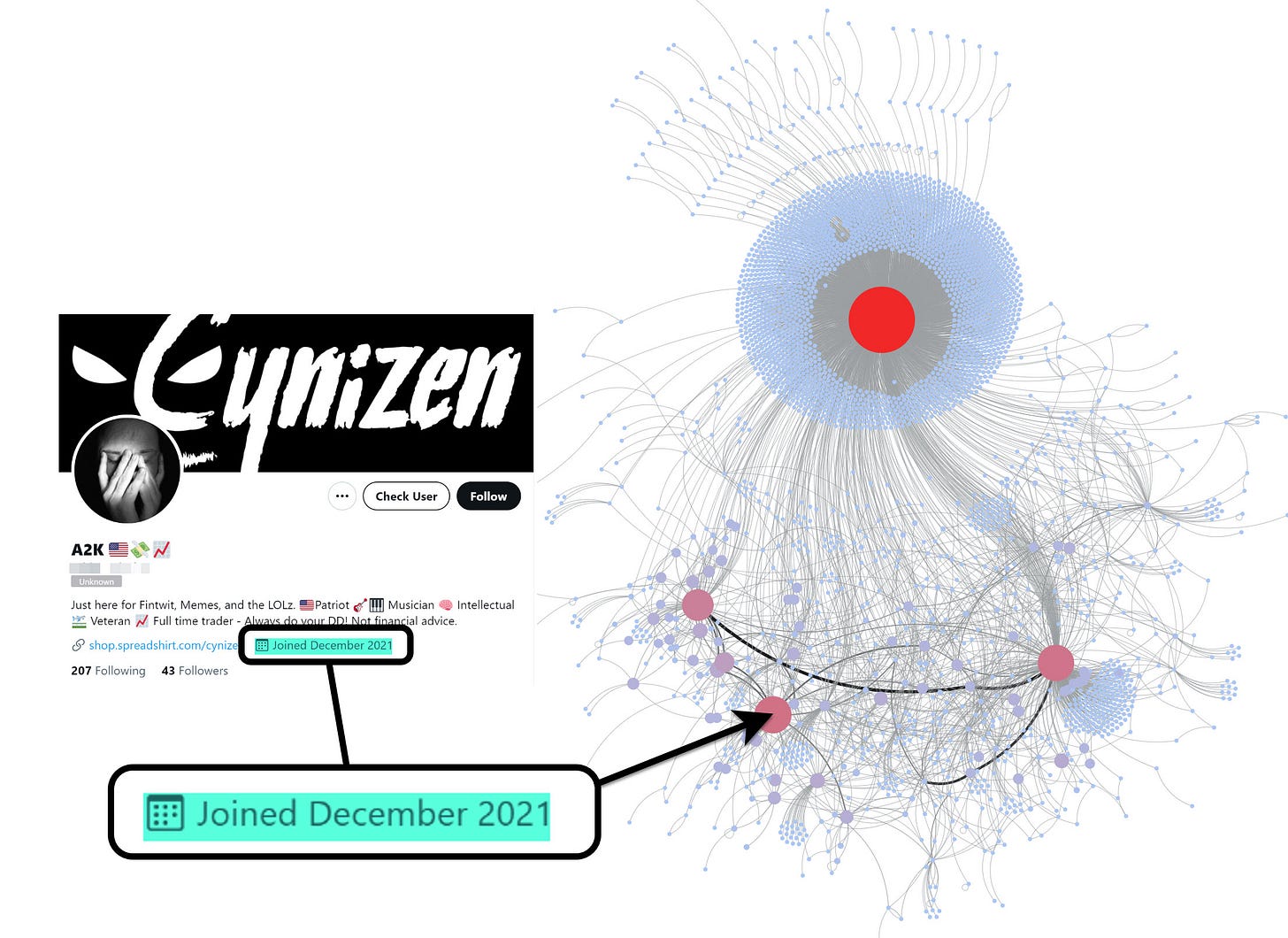

The second graph (Figure 2) shows the nodes colored and sized based on eigenvector centrality. This metric roughly shows the influence of a user in this network. The node coloring goes from light blue (low influence) to bright red (high influence). This means a small node that is light blue has little influence within the network and a large node that is bright red is highly influential.
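For readers unfamiliar with the metric, eigenvector centrality can be approximated with a simple power iteration. The sketch below is an illustration only: it treats reply edges as undirected, the function name and toy edge list are hypothetical, and the graphing tool used for Figure 2 may compute the metric differently (for example, on directed edges).

```python
def eigenvector_centrality(edges, iterations=100, tol=1e-9):
    """Approximate eigenvector centrality by power iteration.

    `edges` is a list of (source, target) pairs, treated as undirected.
    Returns a dict mapping each node to a score normalized to unit length.
    """
    neighbors = {}
    for a, b in edges:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)

    score = {node: 1.0 for node in neighbors}
    for _ in range(iterations):
        # A node's new score is its own score plus its neighbors' scores.
        # Keeping the node's own score avoids oscillation on star-like graphs.
        new = {
            n: score[n] + sum(score[m] for m in neighbors[n])
            for n in neighbors
        }
        norm = sum(v * v for v in new.values()) ** 0.5
        new = {n: v / norm for n, v in new.items()}
        converged = max(abs(new[n] - score[n]) for n in new) < tol
        score = new
        if converged:
            break
    return score


# Toy reply network: four accounts all reply to one central tweet author.
toy_edges = [("a", "hub"), ("b", "hub"), ("c", "hub"), ("d", "hub")]
scores = eigenvector_centrality(toy_edges)
most_influential = max(scores, key=scores.get)
```

In this toy network the hub scores highest because every other node connects to it; in a real network, a node also gains influence by being connected to other high-scoring nodes.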

Examining individuals in the network

Hoaxlines began by examining the users that the graph indicated were highly influential. The first account had a December 2021 creation date. Recent creation dates, especially a higher than expected number in a network, can be a strong indicator of an attempt to manipulate a platform.

The second account we examined was much older, which meant it had a history. Hoaxlines decided to see what could be learned from this account’s past behavior.

We pulled the user’s like history and found very few likes, especially given the age of the account. Deleting account histories is common among operations trying to cover their tracks, so it’s entirely possible that there were more likes or posts in the past. The data as it was in December 2016 showed just one like for 2016 that read “Video: Inside the Clinton network of John Podesta.”

The posting account was Wikileaks, an organization with a history of leaking documents. Per NBC News the leaks have included:

WikiLeaks released hundreds of thousands of U.S. military documents and videos from the Afghan and Iraq wars, including the so-called Collateral Murder footage and “Iraq War logs.”

They also released a trove of more than 250,000 State Department diplomatic cables in 2010. Former U.S. soldier Chelsea Manning was convicted of leaking the documents.

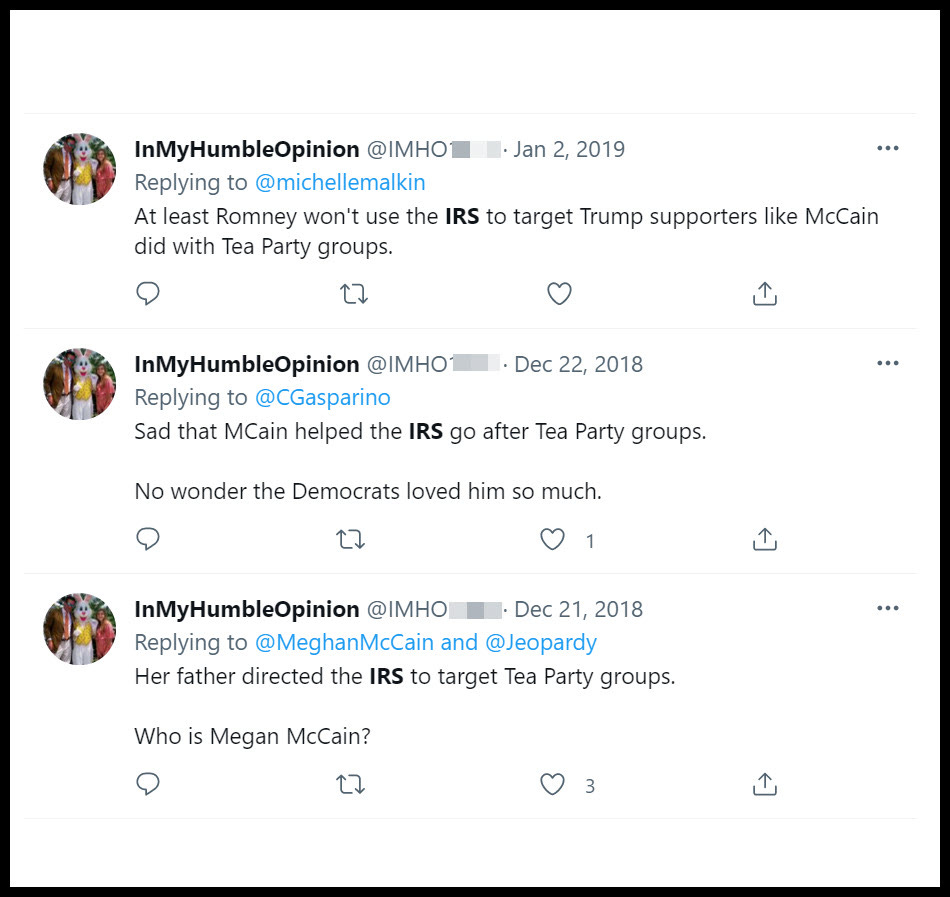

The account IMHO#### tweeted about emails beyond those referencing the 2016 election. For the year 2014, the user liked six tweets. One tweet from Breitbart contained a link to a now-deleted article that was likely about the IRS. Searching for the unique identifier in the web address returned articles about the IRS targeting political groups.

Internal IRS documents had warned employees about cases where political groups sought tax-exempt status, and the IRS characterized groups containing “tea party” as having a potential for abuse. Although the narrative in media at the time only discussed targeting of conservatives, investigations revealed that the IRS had also screened groups that contained “progressive.” Still, the account periodically went on posting frenzies about this talking point, as in 2018 and 2019.2

Repetitive tweeting was common in IMHO####’s Twitter feed. Sometimes the user would post the same text or link over and over, with zero engagement. Genuine accounts typically do not share the same link repeatedly, nor do they keep posting at a high rate when engagement is nearly nonexistent.3
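One way to surface this pattern in a tweet export is to group posts by normalized text and total the engagement each group received. The following is a minimal sketch, assuming a hypothetical record layout with `text`, `likes`, `retweets`, and `replies` fields; the threshold of three repeats is arbitrary.

```python
from collections import Counter


def repeated_posts(tweets, min_repeats=3):
    """Find texts posted at least `min_repeats` times.

    Returns a dict mapping normalized text to a tuple of
    (number of times posted, total engagement across those posts).
    """
    counts = Counter()
    engagement = Counter()
    for t in tweets:
        # Normalize case and whitespace so trivial variations still match.
        key = " ".join(t["text"].lower().split())
        counts[key] += 1
        engagement[key] += (
            t.get("likes", 0) + t.get("retweets", 0) + t.get("replies", 0)
        )
    return {k: (c, engagement[k]) for k, c in counts.items() if c >= min_repeats}


# Toy timeline (hypothetical): the same link posted four times, no engagement.
sample_tweets = [
    {"text": "Read this: example.com/x"},
    {"text": "READ THIS:  example.com/x"},
    {"text": "Read this: example.com/x "},
    {"text": "read this: example.com/x"},
    {"text": "Good morning", "likes": 3},
]
flagged = repeated_posts(sample_tweets)
```

Entries with a high repeat count and an engagement total of zero match the behavior described above.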

In 2013, the account discussed the usage of chemical weapons in Syria. We did not find the account in the Information Operations Archive.

For its last 2200 tweets, IMHO#### had a 100% reply rate, meaning there were no original tweets, quote tweets, or retweets, only replies.
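The reply rate is simply the share of an account's tweets that are replies. A minimal sketch, assuming each tweet record carries a hypothetical `kind` field with values such as `reply`, `retweet`, `quote`, or `original`:

```python
from collections import Counter


def tweet_type_shares(tweets):
    """Return the fraction of tweets of each kind (empty dict if no tweets)."""
    if not tweets:
        return {}
    counts = Counter(t["kind"] for t in tweets)
    total = len(tweets)
    return {kind: n / total for kind, n in counts.items()}


# Toy timeline mirroring the pattern described: 2200 tweets, all replies.
timeline = [{"kind": "reply"}] * 2200
shares = tweet_type_shares(timeline)
reply_rate = shares.get("reply", 0.0)

# A more typical mix, for contrast.
mixed = tweet_type_shares(
    [{"kind": "reply"}, {"kind": "retweet"}, {"kind": "reply"}, {"kind": "original"}]
)
```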

TruthNest assessed a 90% probability that this account is a “bot.” It’s not clear precisely how TruthNest defines a bot. However, the traits listed as the rationale for the percentage are traits that research has associated with inauthentic accounts.4 IMHO####’s assessment was based on the account having a timezone that differs from the one listed for it, spam-posting, silence for long periods of time as is common with inauthentic accounts, and suspicious followers, which are followers that exhibit many traits associated with inauthentic activity.

TruthNest is an app designed to help identify true influencers and to detect bots and trolls with “enhanced profiling” based on account activity. The app is free to use.

Who follows the account?

We examined the accounts IMHO#### followed and those that followed it. The account followed back just 36 of its followers. Among this select group were verified accounts for the Oversight Committee Republicans (GOPoversight), Senate Republicans (SenateGOP), a congressman (DarrelIssa), Michael Caputo (MichaelRCaputo), and John McAfee (OfficialMcAfee). With the exception of MichaelRCaputo, these accounts had likely not recently followed IMHO####.

Account followers are listed in the order they started following a user, so one can see where a specific user falls on the follower timeline. The GOPoversight, SenateGOP, and OfficialMcAfee accounts were much closer to the first followers than they were to the recent followers, but this must be viewed with the limitation of our dataset, which was collected in December 2021.
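Where an account falls on that timeline can be expressed as a relative position. The sketch below assumes the follower list has already been arranged oldest-first; the function name and the handles in the toy list are hypothetical.

```python
def follow_position(followers_oldest_first, handle):
    """Return where `handle` sits in the follower list.

    0.0 means the earliest follower in the list, 1.0 the most recent.
    Raises ValueError if the handle is not in the list.
    """
    index = followers_oldest_first.index(handle)
    if len(followers_oldest_first) == 1:
        return 0.0
    return index / (len(followers_oldest_first) - 1)


# Toy follower list (hypothetical handles), ordered oldest-first.
followers = [
    "early_1", "early_2", "verified_x", "mid_1", "mid_2", "mid_3",
    "mid_4", "mid_5", "late_1", "late_2", "late_3",
]
position = follow_position(followers, "verified_x")
```

A value near 0.0 indicates an early follow relative to the followers still present in the list. Because removed or deleted followers no longer appear, the position is relative to the snapshot, not to the account's full history.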

Inauthentic accounts sometimes remove followers or wipe an account of tweets and other information before rebranding the account as something new. Still, the presence of these followers raised questions, some of which Hoaxlines could not answer.

The tweet that received 9600 engagements

As promised, this is the tweet that the original hashtag data isolated as receiving over 9600 engagements, while the second most engaged tweet had fewer than 1500.

Botometer is a project of the Observatory on Social Media (OSoMe, pronounced "awesome") at Indiana University. OSoMe is a collaboration between the Network Science Institute (IUNI), the Center for Complex Networks and Systems Research (CNetS), and the Media School at Indiana University. The following individuals have contributed to this project: Clayton A Davis, Onur Varol, Kaicheng Yang, Mohsen Sayyadi, Ben Serrette, Emilio Ferrara, Alessandro Flammini, and Filippo Menczer.

@DFRLab. “#BotSpot: Twelve Ways to Spot a Bot.” DFRLab, 28 Aug. 2017, https://medium.com/dfrlab/botspot-twelve-ways-to-spot-a-bot-aedc7d9c110c.

Anise, Olabode, and Jordan Wright. “Anatomy of Twitter Bots: Amplification Bots.” Duo Labs, 2018, https://duo.com/labs/research/anatomy-of-twitter-bots-amplification-bots.

Antenore, Marzia, et al. “A Comparative Study of Bot Detection Techniques Methods with an Application Related to Covid-19 Discourse on Twitter.” arXiv [cs.SI], 1 Feb. 2021, http://arxiv.org/abs/2102.01148.

Beatson, Oliver, et al. “Automation on Twitter: Measuring the Effectiveness of Approaches to Bot Detection.” Social Science Computer Review, SAGE Publications Inc, Aug. 2021, p. 08944393211034991.

Caldarelli, Guido, et al. “The Role of Bot Squads in the Political Propaganda on Twitter.” Communications Physics, vol. 3, no. 1, Nature Publishing Group, May 2020, pp. 1–15.

Cresci, Stefano. “A Decade of Social Bot Detection.” Communications of the ACM, vol. 63, no. 10, Association for Computing Machinery (ACM), Sept. 2020, pp. 72–83.

Ferrara, Emilio, et al. “The Rise of Social Bots.” Communications of the ACM, vol. 59, no. 7, Association for Computing Machinery, June 2016, pp. 96–104.

Gilani, Zafar, et al. “A Large-Scale Behavioural Analysis of Bots and Humans on Twitter.” ACM Trans. Web, vol. 13, no. 1, Association for Computing Machinery, Feb. 2019, pp. 1–23.

Giorgi, Salvatore, et al. “Characterizing Social Spambots by Their Human Traits.” Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Association for Computational Linguistics, 2021, pp. 5148–58.

Hajli, Nick, et al. “Social Bots and the Spread of Disinformation in Social Media: The Challenges of Artificial Intelligence.” British Journal of Management, Wiley, Oct. 2021, doi:10.1111/1467-8551.12554.

Himelein-Wachowiak, Mckenzie, et al. “Bots and Misinformation Spread on Social Media: Implications for COVID-19.” Journal of Medical Internet Research, vol. 23, no. 5, May 2021, p. e26933.

Luceri, Luca, et al. “Down the Bot Hole: Actionable Insights from a 1-Year Analysis of Bots Activity on Twitter.” arXiv [cs.SI], 29 Oct. 2020, http://arxiv.org/abs/2010.15820.

Rauchfleisch, Adrian, and Jonas Kaiser. “The False Positive Problem of Automatic Bot Detection in Social Science Research.” PloS One, vol. 15, no. 10, Oct. 2020, p. e0241045.

Schuchard, Ross, et al. “Bots in Nets: Empirical Comparative Analysis of Bot Evidence in Social Networks.” Complex Networks and Their Applications VII, Springer International Publishing, 2019, pp. 424–36.

Sheridan, Kelly. “Spot the Bot: Researchers Open-Source Tools to Hunt Twitter Bots.” Dark Reading, 6 Aug. 2018, https://www.darkreading.com/threat-intelligence/spot-the-bot-researchers-open-source-tools-to-hunt-twitter-bots.

Spotting Bots, Cyborgs and Inauthentic Activity. 13 Mar. 2020, https://datajournalism.com/read/handbook/verification-3/investigating-actors-content/3-spotting-bots-cyborgs-and-inauthentic-activity.

Stella, Massimo, et al. “Bots Increase Exposure to Negative and Inflammatory Content in Online Social Systems.” Proceedings of the National Academy of Sciences of the United States of America, vol. 115, no. 49, Dec. 2018, pp. 12435–40.