Summary

On November 12, 2021, conservative influencers began using the hashtag #RacistJoe, which ultimately appeared in Twitter's trending topics. Our data analysis strongly suggests that the trend was at least partially the consequence of platform manipulation. Behavior similar to what we observed in the #RacistJoe data has been reported in the past as part of attempts to influence public opinion and media reporting. Coordinated activity inflates engagement, which games the platform's algorithm so that more people see the boosted content, sometimes including users who do not already follow the tweet authors.

An examination of users who retweeted two or more of the #RacistJoe-related tweets analyzed by Hoaxlines revealed traits associated with platform manipulation. That the misleading campaign trended on Twitter, drowning out the most relevant voices, such as that of the President of the Negro Leagues Museum, demonstrates how a small group can effectively silence opposition.

At least two users in the dataset appeared to target the username “POTUS.” Targeting the US President may not achieve the goals ordinarily associated with social media harassment, which, according to Mozilla Fellow Odanga Madung, are “to silence members of civil society, muddy their reputations, and stifle the reach of their messaging.” Still, Hoaxlines documented the findings for their potential research value. Twitter's platform manipulation policies already address many of the problematic behaviors Hoaxlines observed, but enforcement is inconsistent, leaving people in democratic nations vulnerable to both domestic and foreign manipulation.

Key findings:

Hoaxlines’ examination of accounts that retweeted two or more #RacistJoe-related tweets revealed that many had traits associated with inauthentic accounts, that is, online entities (social media accounts or websites) that mislead others about who they are or what they aim to achieve. Inauthentic behavior and platform manipulation do not necessarily indicate that activity is automated.

Users who retweeted more than one of the #RacistJoe-related tweets had some or all of the following traits: blank profiles, formulaic handles, recent creation dates, a history of dormancy, no identifiable information, and a narrow range of interests atypical of genuine accounts.
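The two steps described above, selecting users who retweeted two or more distinct tweets and then checking them for telltale traits, can be sketched as follows. This is a minimal illustration under stated assumptions, not Hoaxlines' actual method: the `Account` record, the function names, the one-year "recent" cutoff, and the handle pattern (letters followed by exactly eight digits, resembling default sign-up handles) are all hypothetical. It covers only three of the listed traits, because the others require judgment that a single rule cannot capture.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from datetime import date

@dataclass
class Account:
    handle: str
    bio: str
    created: date

# Assumed heuristic: letters followed by exactly eight digits, resembling
# the default handles suggested at sign-up (e.g. "Jane48213907").
FORMULAIC_HANDLE = re.compile(r"^[A-Za-z_]+\d{8}$")

def repeat_retweeters(retweets):
    """Return users who retweeted two or more distinct tweets.

    retweets: iterable of (user_handle, tweet_id) pairs.
    """
    tweets_by_user = defaultdict(set)
    for user, tweet_id in retweets:
        tweets_by_user[user].add(tweet_id)  # a set ignores repeat retweets of one tweet
    return {user for user, tweets in tweets_by_user.items() if len(tweets) >= 2}

def trait_flags(account, as_of, recent_days=365):
    """Flag three of the listed traits; recent_days is an illustrative cutoff."""
    return {
        "blank_profile": not account.bio.strip(),
        "formulaic_handle": bool(FORMULAIC_HANDLE.match(account.handle)),
        "recently_created": (as_of - account.created).days <= recent_days,
    }

retweets = [("alice", 1), ("alice", 2), ("bob", 1),
            ("Jane48213907", 1), ("Jane48213907", 3), ("bob", 1)]
print(sorted(repeat_retweeters(retweets)))  # ['Jane48213907', 'alice']
print(trait_flags(Account("Jane48213907", "", date(2021, 10, 1)), as_of=date(2021, 11, 12)))
```

Here "bob" is excluded because he retweeted the same tweet twice rather than two different tweets. Flags like these only mark accounts for closer review; as the text notes, no single trait establishes that an account is inauthentic.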

Multiple users who retweeted two or more pieces of #RacistJoe-related content tweeted at rates that the Oxford Internet Institute’s Computational Propaganda project (an average of over 55 tweets per day) and the Digital Forensics Lab (an average of over 75 tweets per day) have both classified as indicative of inauthentic activity, with some tweeting as many as 1,000 times per day or 150 times per hour.
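The rate thresholds above reduce to simple arithmetic. The sketch below assumes that the average is a lifetime figure, meaning an account's total tweet count divided by its age in days. That is a common approach, but the text does not specify the method, and the function names are hypothetical. Peak rates such as 1,000 tweets per day or 150 per hour would instead require timestamped tweets.

```python
from datetime import date

# Average tweets-per-day thresholds as cited in the text.
THRESHOLDS = {
    "Computational Propaganda project": 55,
    "Digital Forensics Lab": 75,
}

def average_tweets_per_day(total_tweets, created, as_of):
    """Lifetime average: total tweets divided by account age in days (assumed method)."""
    age_days = max((as_of - created).days, 1)  # treat same-day accounts as one day old
    return total_tweets / age_days

def thresholds_exceeded(total_tweets, created, as_of):
    """Return the average rate and the names of the thresholds it is above."""
    rate = average_tweets_per_day(total_tweets, created, as_of)
    return rate, [name for name, limit in THRESHOLDS.items() if rate > limit]

as_of = date(2021, 11, 12)
print(thresholds_exceeded(21900, date(2020, 11, 12), as_of))  # (60.0, ['Computational Propaganda project'])
print(thresholds_exceeded(36500, date(2020, 11, 12), as_of))
```

An account averaging 60 tweets per day crosses only the lower threshold. At 100 per day, it crosses both.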

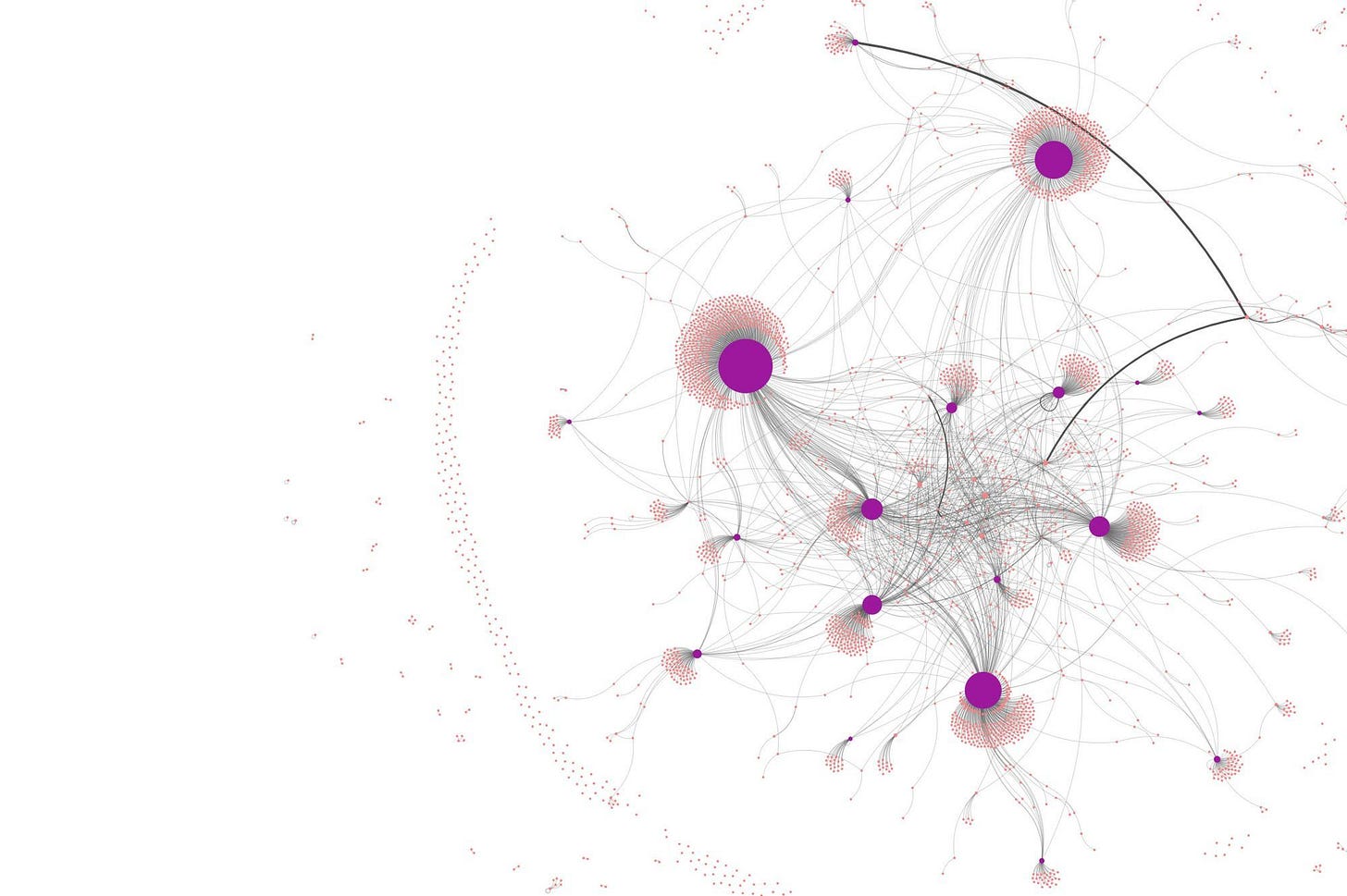

Data visualization of the network revealed a formation seen in past inauthentic campaigns, where it has been used to boost content across time and space.

A group of 646 users who retweeted two or more #RacistJoe-related tweets had account creation dates that skewed toward 2020 and 2021, a pattern common to information operations regardless of who is behind them.
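A creation-date skew like this can be measured by tallying accounts by creation year and computing the share created in the years of interest. The function below is a hypothetical sketch, and the sample dates are made up for illustration. Whether a given share counts as a skew depends on the baseline it is compared against, such as a random sample of accounts, which this sketch leaves out.

```python
from collections import Counter
from datetime import date

def creation_year_profile(created_dates, focus_years=(2020, 2021)):
    """Count accounts by creation year and the share created in focus_years."""
    by_year = Counter(d.year for d in created_dates)
    total = sum(by_year.values())
    share = sum(by_year[y] for y in focus_years) / total if total else 0.0
    return by_year, share

# Illustrative dates only, not drawn from the #RacistJoe dataset.
dates = [date(2009, 3, 1), date(2020, 6, 15), date(2021, 1, 2), date(2021, 10, 30)]
by_year, share = creation_year_profile(dates)
print(sorted(by_year.items()), share)  # [(2009, 1), (2020, 1), (2021, 2)] 0.75
```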

The activity in the #RacistJoe dataset remained concentrated around a handful of tweets as the network grew larger, which conflicts with what we expect from a trending topic that takes off naturally. A landmark social bot study in Nature (2018) stated: “… as a story reaches a broader audience organically, the contribution of any individual account or group of accounts should matter less.”
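One way to check whether activity stays concentrated as a network grows is to track the fraction of all retweets that go to the k most-retweeted tweets, measured after successive numbers of retweets. According to the quoted study, an organically spreading story should show this share falling over time. The top-k share is a simple stand-in chosen for illustration. It is not presented as the measure used by Hoaxlines or by the cited study, and the function names and toy stream are hypothetical.

```python
from collections import Counter

def top_k_share(retweeted_ids, k=5):
    """Fraction of retweets that went to the k most-retweeted tweets."""
    counts = Counter(retweeted_ids)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(n for _, n in counts.most_common(k)) / total

def concentration_over_time(retweet_stream, checkpoints, k=5):
    """Top-k share at each checkpoint, i.e. after the first n retweets.

    retweet_stream: ids of the retweeted tweets, in chronological order.
    """
    return [(n, top_k_share(retweet_stream[:n], k)) for n in checkpoints]

stream = [1, 1, 2, 1, 3, 1, 2, 1, 1, 4]
print(concentration_over_time(stream, checkpoints=[4, 10], k=1))  # [(4, 0.75), (10, 0.6)]
```

A share that stays high across checkpoints as the total number of retweets climbs matches the pattern described above.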